AMD’s AI Ambitions with Instinct MI300X

The world of artificial intelligence is a battleground where tech giants compete relentlessly to outpace each other. AMD, a major player in the semiconductor industry, has openly admitted that its latest AI accelerator, the Instinct MI300X, still trails Nvidia's H100 Hopper. This acknowledgment sheds light on the intense competition driving the field. In this blog post, we'll explore why AI accelerators matter, what AMD's Instinct MI300X brings to the table, and why Nvidia continues to hold its lead. For tech enthusiasts and industry professionals alike, understanding these developments is key to anticipating where AI technology is headed.

Unveiling the World of AI Accelerators

AI accelerators are specialized hardware designed to speed up AI workloads, making them crucial in fields ranging from autonomous vehicles to data analytics. They handle the underlying computations far more efficiently than traditional CPUs, cutting the time it takes to train AI models and to run them in production. Demand for these accelerators has surged as AI applications have become more widespread.

General-purpose CPUs once handled most computing, but AI workloads have largely moved to GPUs and to GPU-derived accelerators such as the MI300X and H100, which are both data-center GPUs built for AI. These chips are tailored to specific tasks, such as the matrix multiplications at the heart of neural network processing. This specialization lets them run faster and more efficiently, a critical factor as AI models grow in complexity and size.
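To make the matrix-multiplication point concrete, here is a minimal sketch in plain Python. It shows that a dense neural-network layer applied to a batch of inputs is just a matrix product, and it counts the arithmetic involved using the standard rule that an (m × k) by (k × n) product takes 2·m·k·n floating-point operations (one multiply and one add per inner step). The function names and toy values are illustrative only; real accelerators run these multiply-add loops massively in parallel in dedicated hardware (Nvidia's Tensor Cores, AMD's Matrix Cores) rather than in Python loops.

```python
def matmul(a, b):
    """Naive matrix multiply: a is m x k, b is k x n, result is m x n."""
    m, k = len(a), len(a[0])
    k2, n = len(b), len(b[0])
    if k != k2:
        raise ValueError("inner dimensions must match")
    out = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply and one add per step
            out[i][j] = acc
    return out


def matmul_flops(m, k, n):
    """Floating-point operations for an (m x k) @ (k x n) product."""
    return 2 * m * k * n


# A toy dense layer: a batch of 2 samples with 2 features each,
# mapped to 3 outputs by a 2 x 3 weight matrix (hypothetical values).
inputs = [[1.0, 2.0], [3.0, 4.0]]
weights = [[0.5, -1.0, 0.0], [0.25, 0.0, 1.0]]
outputs = matmul(inputs, weights)
print(outputs)  # [[1.0, -1.0, 2.0], [2.5, -3.0, 4.0]]

# Scale it up: 2048 tokens through one 4096 x 4096 layer (illustrative sizes).
print(matmul_flops(2048, 4096, 4096))  # 68719476736, about 6.9e10 operations
```

Even one mid-sized layer on a modest batch needs tens of billions of operations, and a large model stacks many such layers, which is why hardware built specifically for matrix math matters so much.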

The competition between AMD and Nvidia is a testament to the value of AI accelerators. Both companies have invested heavily in developing hardware that can push the boundaries of AI capabilities. Their rivalry has spurred innovation, leading to the creation of more powerful and efficient accelerators that are reshaping the AI landscape.

The Rise of AMD’s Instinct MI300X

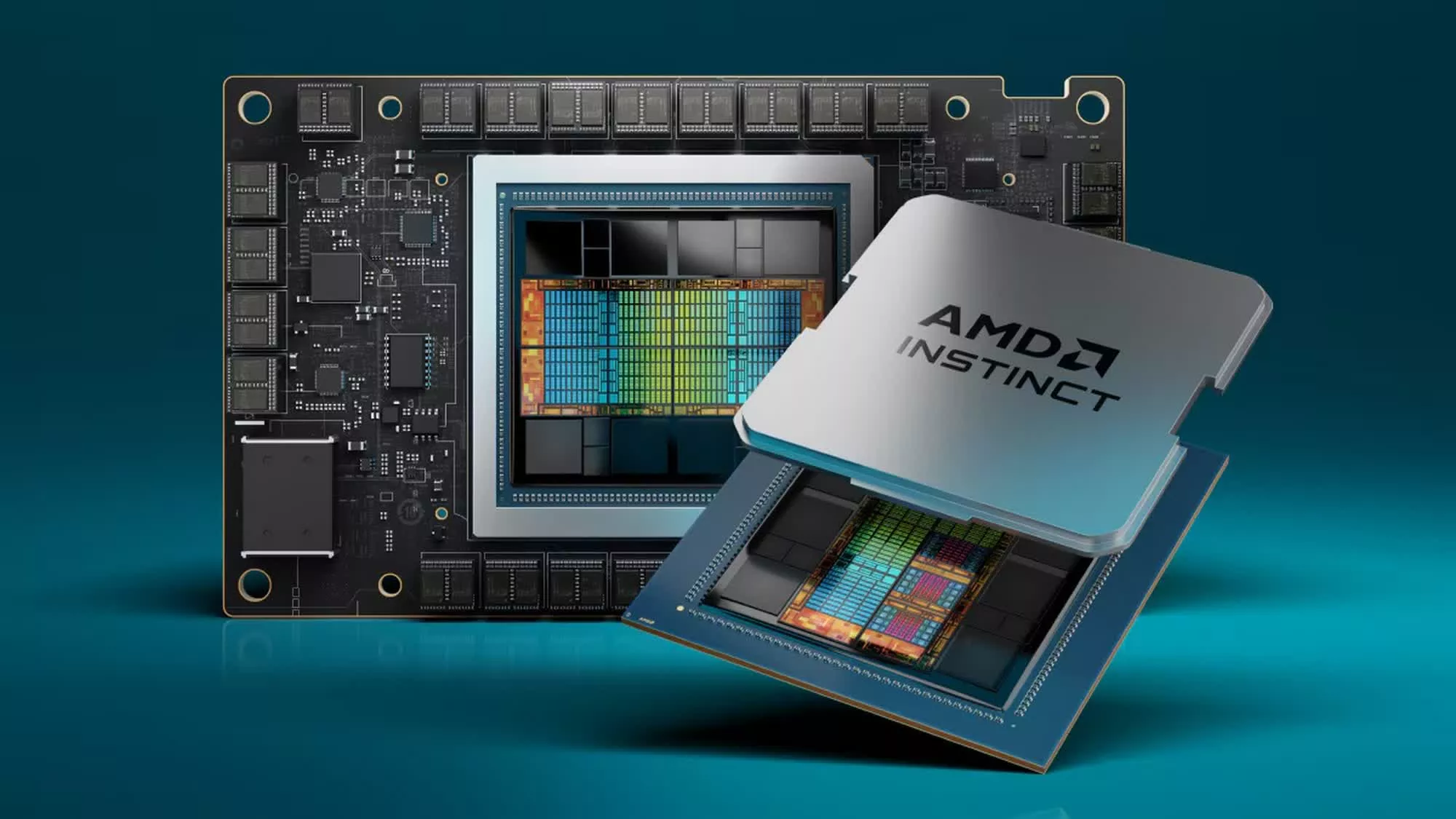

AMD's Instinct MI300X represents a significant step forward in the company's AI ambitions. Built on AMD's CDNA 3 architecture, it is a high-performance accelerator designed for the large-scale computations needed to train and run sophisticated AI models.

One of the MI300X's standout features is its memory: 192 GB of HBM3, more than double the 80 GB on Nvidia's H100 (SXM). Combined with substantial raw compute, this lets it hold and process very large models. AMD also positions the MI300X as energy-efficient, an important consideration for large data centers where power consumption is a major concern.

Despite these advancements, AMD acknowledges that the MI300X still falls short of matching Nvidia’s H100 Hopper. This admission highlights the challenges involved in developing cutting-edge AI hardware. It also reflects the relentless pace of innovation in the tech industry, where even the slightest edge can determine market leadership.

Nvidia’s Dominance with the H100 Hopper

Nvidia's H100 Hopper is widely regarded as the leading AI accelerator in the market. Its performance has set a high benchmark for competitors, including AMD. The H100 is built on Nvidia's Hopper GPU architecture, which is optimized for the demanding workloads of AI applications.

The H100 Hopper excels in both speed and efficiency. Its architecture is designed to maximize throughput while minimizing latency. This performance advantage is a key reason Nvidia continues to dominate the AI accelerator market, attracting major customers in industries ranging from automotive to healthcare.

Another factor behind Nvidia's lead is its extensive software ecosystem. Nvidia's CUDA platform and its libraries, such as cuDNN, are widely used by researchers and developers. This ecosystem not only unlocks the H100's hardware capabilities but also encourages widespread adoption across sectors.

Why AMD Can’t Quite Catch Up

Despite its efforts, AMD faces significant challenges in surpassing Nvidia’s lead in AI accelerators. One major hurdle is the maturity and sophistication of Nvidia’s technology. Nvidia has a well-established track record in AI hardware, giving it a substantial advantage in terms of experience and expertise.

Nvidia's strong ecosystem of software and partnerships further solidifies its position. The combination of top-tier hardware and robust tooling makes Nvidia an attractive choice for businesses seeking to adopt AI. This network effect creates a barrier for competitors like AMD, which must not only match Nvidia's hardware but also make its own software stack, ROCm, a comparable alternative.

Furthermore, AMD’s focus on energy efficiency, while commendable, may not be enough to sway potential customers. In the competitive landscape of AI accelerators, performance is often the deciding factor. Nvidia’s ability to consistently deliver superior performance gives it a significant edge, making it the preferred choice for many organizations.

The Future of AI Accelerators

The race to develop the best AI accelerator is far from over. Both AMD and Nvidia are likely to continue pushing the boundaries of what their hardware can achieve. For AMD, the challenge will be to bridge the performance gap with Nvidia while maintaining its emphasis on energy efficiency and cost-effectiveness.

Looking ahead, advancements in AI hardware are expected to drive further innovation in AI applications. As accelerators become more powerful, they will enable the development of more complex AI models, unlocking new possibilities across various industries. This evolution will likely fuel continued investment and competition in the AI accelerator market.

For businesses and developers, staying informed about the latest developments in AI hardware is crucial. Understanding the strengths and limitations of different accelerators can help organizations make informed decisions about which technology to adopt. This knowledge will be essential as AI continues to play an increasingly important role in shaping the future of technology.

Navigating the AI Accelerator Landscape

For those involved in AI development, selecting the right accelerator is a critical decision. The choice between AMD’s MI300X and Nvidia’s H100 Hopper depends on several factors, including performance requirements, budget constraints, and existing technology infrastructure.

For enterprises prioritizing raw performance, Nvidia’s H100 Hopper is often the preferred choice. Its proven capabilities and extensive ecosystem make it a reliable option for handling demanding AI workloads. However, for organizations focused on energy efficiency and cost-effectiveness, AMD’s MI300X may be an appealing alternative.

Ultimately, the decision should also consider the long-term goals of the organization. As AI technology continues to evolve, businesses must adapt their strategies to leverage new advancements. This adaptability will be key to maintaining a competitive edge in an increasingly AI-driven world.

Conclusion

AMD’s admission that its Instinct MI300X can’t quite match Nvidia’s H100 Hopper underscores the competitive nature of the AI accelerator market. While AMD has made significant strides with its MI300X, Nvidia’s established dominance and comprehensive ecosystem continue to set the standard.

For businesses and developers, understanding the capabilities and limitations of different AI accelerators is crucial. By staying informed and adapting to new advancements, organizations can harness the full potential of AI technology and drive innovation in their respective fields.

As we move forward, the ongoing rivalry between AMD and Nvidia will undoubtedly lead to further breakthroughs in AI hardware. For now, the race continues, and the future of AI accelerators promises to be both exciting and impactful.

FAQ: AMD Admits Its Instinct MI300X AI Accelerator Still Can’t Quite Beat Nvidia’s H100 Hopper

Q1: What is the AMD Instinct MI300X?

A1: The AMD Instinct MI300X is an AI accelerator designed by AMD to handle demanding AI tasks such as machine learning and deep learning.

Q2: How does the MI300X compare to Nvidia’s H100 Hopper?

A2: AMD has acknowledged that the MI300X cannot yet outperform Nvidia's H100 Hopper across the board; by the company's own account, the H100 still comes out ahead in some areas.

Q3: What are the key features of the MI300X?

A3: The MI300X's headline feature is its very large memory capacity (192 GB of HBM3). This makes it versatile enough for many different workloads, and it is designed specifically to handle large AI models.
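Why does memory capacity matter so much? A rough back-of-the-envelope sketch helps. The snippet below assumes the published capacities of 192 GB for the MI300X and 80 GB for the H100 (SXM), and counts only the bytes needed to store a model's weights (2 bytes per parameter at FP16). It deliberately ignores everything else that also consumes memory, such as activations, the KV cache, and framework overhead, so real deployments need more headroom. The helper names are hypothetical and chosen for illustration.

```python
import math

# Published HBM capacities in GB (1 GB = 1e9 bytes).
GPU_MEMORY_GB = {
    "AMD Instinct MI300X": 192,
    "Nvidia H100 (SXM)": 80,
}

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weights_gb(num_params, dtype="fp16"):
    """Memory for the model weights alone, in GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_weights(num_params, gpu_gb, dtype="fp16"):
    """Fewest GPUs whose combined memory can hold the weights (weights only)."""
    return math.ceil(weights_gb(num_params, dtype) / gpu_gb)


# Example: a 70-billion-parameter model stored in FP16.
params = 70e9
print(f"weights: {weights_gb(params):.0f} GB")  # weights: 140 GB
for name, gb in GPU_MEMORY_GB.items():
    print(name, min_gpus_for_weights(params, gb))
# AMD Instinct MI300X 1
# Nvidia H100 (SXM) 2
```

Under these simplified assumptions, a 70B-parameter FP16 model fits on a single MI300X but must be split across at least two H100s, which adds communication overhead. This is one concrete reason memory capacity is central to AMD's pitch, even where the H100 leads on other measures.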

Q4: Why is this admission from AMD important?

A4: It shows that AMD is being candid about where it stands in the AI chip race, while also signaling that it has no intention of conceding the market to Nvidia.

Q5: Does this mean AMD is out of the AI chip market?

A5: Not at all. AMD remains an active and serious competitor in the AI chip market; it is simply being transparent about its current position.

Q6: What advantages does Nvidia’s H100 Hopper have?

A6: The H100 Hopper is renowned for AI workloads, where it remains the performance leader. It also benefits from much broader software support, which gives teams more confidence when building AI projects.

Q7: Is AMD planning to improve the MI300X?

A7: There is no official announcement about this yet, but it is very likely that AMD is working on improvements. Tech companies continually refine their products.

Q8: How might this affect the AI chip market?

A8: It will likely intensify competition, which, as is typical in the industry, tends to give users better products. It also underscores that the AI chip market is still evolving rapidly.